Video Super-Resolution Project |

1Chih-Chun Hsu, 1Chia-Wen Lin, and 2Li-Wei Kang |

1Department of Electrical Engineering, National Tsing Hua University, Hsinchu 30013, Taiwan 2Department of Computer Science and Information Engineering, National Yunlin University of Science and Technology, Yunlin 640, Taiwan |

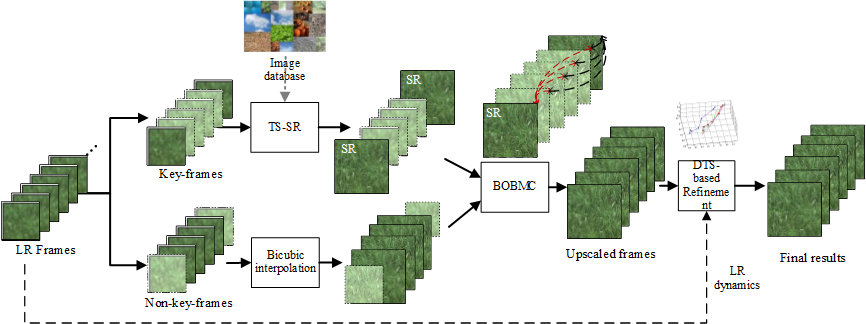

Fig. 1. Block diagram of the proposed DTS-SR framework. The key-frames are first uniformly sampled from the LR input video with an interval of

|

This paper addresses the problem of hallucinating the missing high-resolution (HR) details of a low-resolution (LR) video while maintaining the temporal coherence of the reconstructed HR details by using dynamic texture synthesis (DTS). Most existing multi-frame-based video super-resolution (SR) methods suffer from limited reconstructed visual quality due to inaccurate sub-pixel motion estimation between frames in an LR video. To achieve high-quality reconstruction of HR details for an LR video, we propose a texture-synthesis-based video SR method, as shown in Fig. 1, in which a novel DTS scheme renders the reconstructed HR details in a temporally coherent way. This effectively addresses the temporal incoherence problem caused by traditional texture-synthesis-based image SR methods. To further reduce the complexity of the proposed method, texture-synthesis-based SR (TS-SR) is performed only on a set of key-frames, while the HR details of the remaining non-key-frames are simply predicted using bi-directional overlapped block motion compensation (BOBMC). After all frames are upscaled, the proposed DTS-SR is applied to maintain the temporal coherence of the HR video. Experimental results demonstrate that the proposed method achieves significant subjective and objective visual quality improvement over state-of-the-art video SR methods. |
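The key-frame / non-key-frame split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `split_key_frames`, the `interval` parameter, and the choice to treat the last frame as an extra key-frame (so that every non-key-frame has a key-frame on both sides) are hypothetical and not taken from the paper.

```python
def split_key_frames(num_frames, interval):
    """Uniformly sample key-frames and pair each non-key-frame with its
    previous and next key-frame (hypothetical helper, not the authors' code).

    Returns (keys, neighbours), where `keys` is the sorted list of key-frame
    indices and `neighbours` maps each non-key-frame index to the
    (previous_key, next_key) pair a bi-directional predictor would use.
    """
    if num_frames <= 0 or interval <= 0:
        raise ValueError("num_frames and interval must be positive")

    # Uniform sampling: every `interval`-th frame is a key-frame.
    keys = list(range(0, num_frames, interval))

    # Assumption: close the sequence with a key-frame so that trailing
    # frames still have a key-frame on each side.
    if keys[-1] != num_frames - 1:
        keys.append(num_frames - 1)

    neighbours = {}
    for prev_key, next_key in zip(keys, keys[1:]):
        for t in range(prev_key + 1, next_key):
            neighbours[t] = (prev_key, next_key)
    return keys, neighbours
```

In such a split, only the frames in `keys` would go through the costly TS-SR step, while each frame in `neighbours` would be predicted from its two surrounding key-frames.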


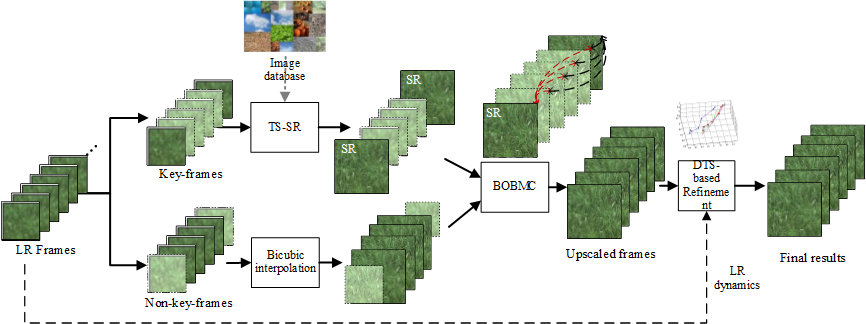

Fig. 2. Block diagram of the proposed DTS-based refinement.

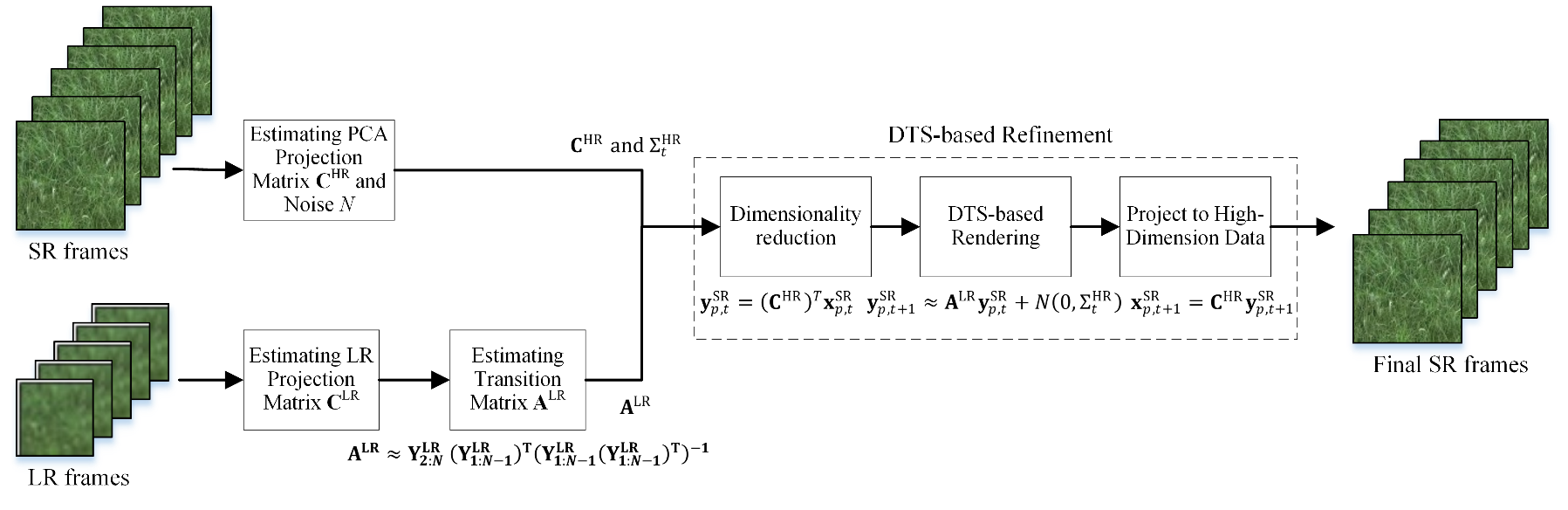

Fig. 3. Examples of low-dimensional trajectories projected from the ground-truth HR textural patches (red), their downscaled LR patches (pink), the HR patches upscaled from the LR patches via TS-SR+BOBMC (blue), and the SR patches obtained via the proposed DTS-SR method (green). The three axes, PC-I, PC-II, and PC-III, indicate the first, second, and third principal components of a video patch projected using PCA.

Fig. 2 depicts the proposed DTS-based refinement scheme, in which both the input LR frames and the reconstructed HR frames obtained via hybrid TS-SR/BOBMC are used to derive temporally coherent HR video frames. Our method is based on the assumption that the content of a textural patch varies over time and that the transition between the textures can be modeled as a linear or nonlinear system [21]-[23], [34].
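To make the linear case of this assumption concrete, the sketch below fits a linear dynamical system of the form x_{t+1} = A x_t, y_t = C x_t + mean to a sequence of vectorised patches, using the SVD-based closed-form estimate associated with the dynamic-texture model of [22]. It is a minimal illustration, not the DTS-based refinement of this work; the function names and the `n_states` parameter are hypothetical, and noise terms are omitted.

```python
import numpy as np

def fit_linear_dynamic_texture(frames, n_states):
    """Fit y_t = C x_t + mean, x_{t+1} = A x_t to a patch sequence.

    frames: array of shape (T, d), one vectorised patch per row.
    Returns (A, C, X, mean), with X holding the estimated states (n_states x T).
    """
    Y = np.asarray(frames, dtype=float).T          # d x T observation matrix
    mean = Y.mean(axis=1, keepdims=True)           # temporal mean patch
    U, s, Vt = np.linalg.svd(Y - mean, full_matrices=False)

    C = U[:, :n_states]                            # observation matrix
    X = s[:n_states, None] * Vt[:n_states]         # hidden states, n_states x T

    # Least-squares state transition: X[:, 1:] ~= A @ X[:, :-1]
    A = X[:, 1:] @ np.linalg.pinv(X[:, :-1])
    return A, C, X, mean

def synthesize(A, C, x0, mean, steps):
    """Roll the fitted system forward from state x0 (noise-free)."""
    x = np.asarray(x0, dtype=float)
    out = []
    for _ in range(steps):
        out.append(C @ x + mean.ravel())
        x = A @ x
    return np.stack(out)                           # steps x d
```

Calling `synthesize(A, C, X[:, 0], mean, T)` replays the sequence from its first estimated state; for patches that truly follow a low-order linear system this closely reproduces the input, while real textures would generally need a noise term or a nonlinear model.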

Fig. 3 shows the four projected trajectories of the ground-truth HR patches, the downscaled LR patches, the SR patches obtained via hybrid TS-SR/BOBMC, and the SR patches obtained via the proposed DTS-SR method (i.e., hybrid TS-SR/BOBMC followed by DTS-based refinement), respectively. We can observe from Fig. 3 that the trajectory of the SR patches obtained via DTS-SR is much closer to the ground-truth trajectory than that of the SR patches obtained via hybrid TS-SR/BOBMC. As a result, the proposed DTS-SR method addresses the temporal incoherence problem in video SR well, which can also be observed in the SR videos. See the video demo pages for more information.
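A trajectory comparison of this kind can be sketched as follows. The code projects vectorised patch sequences onto their first three principal components and measures how far one trajectory lies from another. It rests on assumptions that are not stated in the source: the PCA basis is fitted on the ground-truth patches only, all sequences are projected with that shared basis, and closeness is measured as the mean per-frame Euclidean distance. All function names are hypothetical.

```python
import numpy as np

def pca_basis(patches, n_components=3):
    """Return (mean, basis) from PCA of a (T, d) patch sequence via SVD."""
    X = np.asarray(patches, dtype=float)
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]                 # basis rows are PC-I..PC-III

def project(patches, mean, basis):
    """Project a (T, d) patch sequence to a (T, n_components) trajectory."""
    return (np.asarray(patches, dtype=float) - mean) @ basis.T

def mean_trajectory_distance(traj_a, traj_b):
    """Mean per-frame Euclidean distance between two trajectories."""
    return float(np.linalg.norm(traj_a - traj_b, axis=1).mean())
```

Under these assumptions, a temporally coherent reconstruction would be expected to yield a smaller `mean_trajectory_distance` to the ground-truth trajectory than a reconstruction with frame-to-frame flicker.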

Fig. 4. Objective visual qualities evaluated by MOVIE for the eight reconstructed SR Videos with different interval lengths between two successive key-frames (KFs).

Fig. 5. Run-time complexity (in seconds) comparison of the proposed SR method for the eight test videos with different interval lengths between two successive key-frames (KFs).

Note that the number of non-key-frames between two successive key-frames influences both the visual quality and the computational complexity. Fig. 4 compares the visual qualities of reconstructed HR videos using our method with different values of

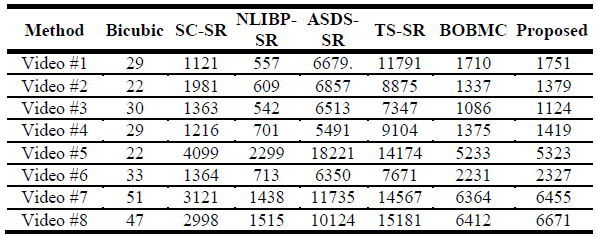

Table 1: Run-Time (in Seconds) Comparison between the Evaluated Methods and the Proposed Method.

The proposed method was implemented in 64-bit MATLAB on the Windows 8.1 operating system, running on a personal computer equipped with an Intel i7 processor and 32 GB of memory. To evaluate the computational complexity of the proposed algorithm, the run-time of each compared method is listed in Table 1, which shows that the proposed method is significantly faster than ASDS-SR and TS-SR.

[19] E. M. Hung, R. L. de Queiroz, F. Brandi, K. F. de Oliveira, and D. Mukherjee, “Video super-resolution using codebooks derived from key-frames,” IEEE Trans. Circuits Syst. Video Technol., vol. 22, no. 9, pp. 1321–1331, Sept. 2012.

[21] A. Schödl, R. Szeliski, D. Salesin, and I. A. Essa, “Video textures,” in Proc. ACM SIGGRAPH, New Orleans, LA, USA, July 2000, pp. 489–498.

[22] G. Doretto, A. Chiuso, Y. N. Wu, and S. Soatto, “Dynamic textures,” Int. J. Comput. Vis., vol. 51, no. 2, pp. 91–109, 2003.

[26] Y. HaCohen, R. Fattal, and D. Lischinski, “Image upsampling via texture hallucination,” in Proc. IEEE Int. Conf. Comput. Photography, Cambridge, MA, USA, Mar. 2010, pp. 20–30.

[34] R. Costantini, L. Sbaiz, and S. Süsstrunk, “Higher order SVD analysis for dynamic texture synthesis,” IEEE Trans. Image Process., vol. 17, no. 1, pp. 42–52, Jan. 2008.
