Video Super-Resolution Project

¹Chih-Chun Hsu, ¹Chia-Wen Lin, and Li-Wei Kang

¹Department of Electrical Engineering, National Tsing Hua University, Hsinchu 30013, Taiwan

To evaluate the proposed SR method subjectively, we conducted a paired-comparison user study [32]. We invited 20 subjects to take part in the experiments. In each trial, a subject was shown two SR videos side by side, produced by two different SR methods and placed in random order, and was asked to choose the preferred video in terms of visual quality, temporal coherence, and details reconstruction, respectively. Visual quality reflects the subject's overall preference. Temporal coherence measures the temporal consistency of the SR videos, whereas details reconstruction measures how well HR details are recovered from the LR videos. The 20 subjects comprised 13 males and 7 females, aged 21 to 31, none of whom knew which SR methods were being evaluated. The videos were shown on a 23-inch full-HD LCD display with a color temperature of 4300K.

In our subjective experiments, we compared the proposed method with Bicubic, SC-SR, NLIBP-SR, ASDS-SR, and TS-SR on the four test videos. Each SR method was compared pairwise with each of the other five methods, for a total of 5 (methods) × 4 (test videos) × 20 (subjects) = 400 comparisons per method, which means that 80 comparisons were made between every pair of methods over the four test videos. To quantify the subjective evaluation results, we calculate the winning frequency matrix [w_ij]

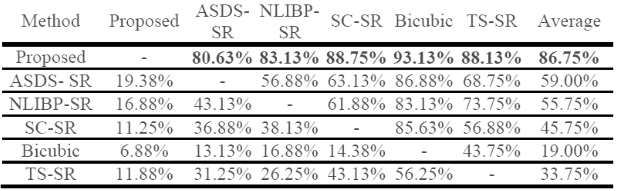
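The bookkeeping behind these counts can be illustrated with a minimal Python sketch. It is not the authors' code. It assumes that entry w_ij counts how often method i was preferred over method j, and that a method's winning percentage is its wins divided by all comparisons it took part in; the exact definitions used in [32] and in the tables may differ. The function names and the vote format (a list of `(winner, loser)` pairs) are hypothetical.

```python
from itertools import combinations

# Method names as listed in the text; the ordering here is arbitrary.
METHODS = ["Proposed", "ASDS-SR", "NLIBP-SR", "SC-SR", "Bicubic", "TS-SR"]


def comparison_counts(num_methods, num_videos, num_subjects):
    """Return (comparisons per pair, per method, overall) for a full design."""
    per_pair = num_videos * num_subjects
    per_method = (num_methods - 1) * per_pair
    num_pairs = len(list(combinations(range(num_methods), 2)))
    return per_pair, per_method, num_pairs * per_pair


def winning_frequency_matrix(votes, methods):
    """Build w, where w[i][j] is the number of times methods[i] beat methods[j].

    `votes` is a hypothetical input format: an iterable of (winner, loser)
    name pairs, one per subject, per video, per method pair.
    """
    index = {name: k for k, name in enumerate(methods)}
    size = len(methods)
    w = [[0] * size for _ in range(size)]
    for winner, loser in votes:
        w[index[winner]][index[loser]] += 1
    return w


def winning_percentage(w):
    """Assumed summary: wins over all comparisons involving each method, in %."""
    percentages = []
    for i, row in enumerate(w):
        wins = sum(row)
        losses = sum(w[j][i] for j in range(len(w)))
        total = wins + losses
        percentages.append(100.0 * wins / total if total else 0.0)
    return percentages


if __name__ == "__main__":
    # Study design from the text: 6 methods, 4 test videos, 20 subjects.
    print(comparison_counts(len(METHODS), 4, 20))
```

With six methods, four test videos, and 20 subjects, `comparison_counts` reproduces the 80 comparisons per pair and 400 comparisons per method stated above.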

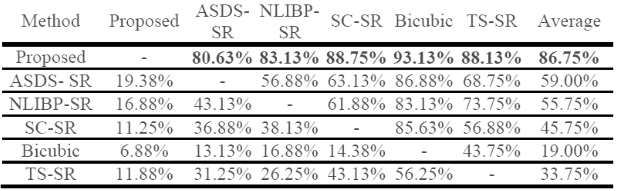

Table 1: Subjective "Visual Quality" Evaluation by Paired Comparisons (in Relative Winning Percentage) for the Four Reconstructed HR Videos Obtained Using the Proposed, ASDS-SR [11], NLIBP-SR [6], SC-SR [9], Bicubic [3], and TS-SR [26] Methods.

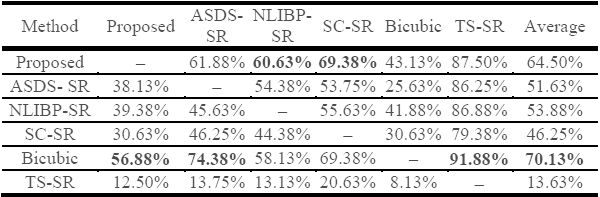

Table 2: Subjective "Temporal Coherence" Evaluation by Paired Comparisons (in Relative Winning Percentage) for the Four Reconstructed HR Videos Obtained Using the Proposed method, ASDS-SR, NLIBP-SR, SC-SR, Bicubic, and TS-SR.

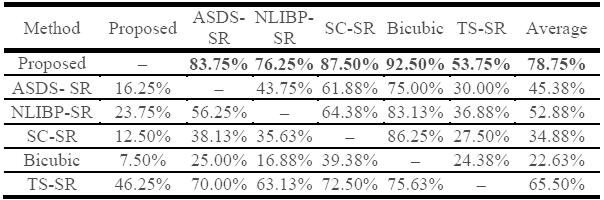

Table 3: Subjective “Details Reconstruction” Evaluation by Paired Comparisons (in Relative Winning Percentage) for the Four Reconstructed HR Videos Obtained Using the Proposed method, ASDS-SR, NLIBP-SR, SC-SR, Bicubic, and TS-SR.

Tables 1 to 3 show that the proposed method performs best subjectively in visual quality and details reconstruction, and second best in temporal coherence, based on the subjective quality evaluation criterion proposed in [32]. Table 2 shows that the Bicubic method outperforms the others in temporal coherence, which is the only criterion on which our method does not perform best. The main reason is that the bicubic method relies purely on interpolation: only the pixel values within the LR version of an image itself are used for SR, and the interpolation scheme is temporally consistent. This yields better temporal consistency but poor performance in both visual quality and details reconstruction.

References

[6] W. Dong, L. Zhang, G. Shi, and X. Wu, “Nonlocal back-projection for adaptive image enlargement,” in Proc. IEEE Int. Conf. Image Process., Cairo, Egypt, Nov. 2009, pp. 349–352.

[9] J. Yang, J. Wright, T. Huang, and Y. Ma, “Image super-resolution via sparse representation,” IEEE Trans. Image Process., vol. 19, no. 11, pp. 2861–2873, Nov. 2010.

[11] W. Dong, L. Zhang, G. Shi, and X. Wu, “Image deblurring and super-resolution by adaptive sparse domain selection and adaptive regularization,” IEEE Trans. Image Process., vol. 20, no. 7, pp. 1838–1857, July 2011.

[26] Y. HaCohen, R. Fattal, and D. Lischinski, “Image upsampling via texture hallucination,” in Proc. IEEE Int. Conf. Comput. Photography, Cambridge, MA, USA, Mar. 2010, pp. 20–30.

[32] J.-S. Lee, “On designing paired comparison experiments for subjective multimedia quality assessment,” IEEE Trans. Multimedia, vol. 16, no. 2, pp. 564–571, Feb. 2014.